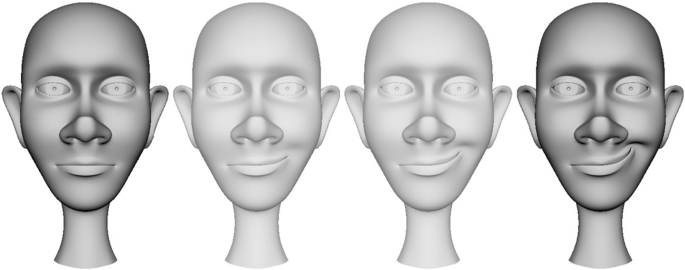

Results show that using abstract muscle definitions reduces the effect of potential noise in the motion capture data and allows any virtual anthropomorphic face model to be animated seamlessly with data acquired from a human face performance. The expressive power of the system and its ability to recognize new expressions were evaluated on a group of test subjects with respect to the six universally recognized facial expressions. We were so happy about that capability that we created a free Unity plugin to retarget faceshift tracking data onto Unity rigs. In this way, the automated creation of a personalized 3-D face model and animation system from 3-D data is demonstrated. Additionally, a radial basis function mapping approach was used to remap motion capture data to any face model. Because the muscle-based animation is parametrized by muscle contraction values, a face performance can be encoded with little bandwidth.
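To make the radial basis function remapping more concrete, here is a minimal sketch in plain Python. It is not the system's actual implementation: the Gaussian kernel, the `sigma` width, and the names `fit_rbf` and `apply_rbf` are all assumptions chosen for illustration, since the text does not say which basis function is used. The idea is to fit weights so that a few reference markers on the performer land exactly on their counterparts on the target face model, then reuse those weights for any other captured point.

```python
import math


def _solve(a, b):
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting.

    a is an n x n matrix; b holds n right-hand-side rows (one per equation).
    """
    n = len(a)
    m = [list(a[i]) + list(b[i]) for i in range(n)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [[v / m[i][i] for v in m[i][n:]] for i in range(n)]


def _kernel(p, q, sigma):
    # Gaussian basis function (an assumption; other kernels work too).
    return math.exp(-math.dist(p, q) ** 2 / (2 * sigma ** 2))


def fit_rbf(src, dst, sigma=1.0):
    """Fit weights so that each source marker maps onto its target marker."""
    a = [[_kernel(p, q, sigma) for q in src] for p in src]
    return _solve(a, dst)


def apply_rbf(point, src, weights, sigma=1.0):
    """Map an arbitrary captured point into the target face model's space."""
    dims = len(weights[0])
    return [
        sum(_kernel(point, c, sigma) * w[d] for c, w in zip(src, weights))
        for d in range(dims)
    ]
```

A typical use would be to call `fit_rbf` once per performer and face model pair with a handful of corresponding markers, then call `apply_rbf` on every tracked marker in each frame.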

# Faceshift AG update

Tracked markers are mapped onto a 3-D face model with a virtual muscle animation system. Muscle inverse kinematics then updates the muscle contraction parameters based on marker motion in order to create a virtual character's expression performance.
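One way to picture the muscle inverse kinematics step is as a small fitting problem: find the contraction values that best reproduce the observed marker motion. The sketch below assumes a linear muscle model, where each muscle at full contraction moves the markers by a fixed displacement, and solves for contractions in the range 0 to 1 with projected gradient descent. The linear model, the solver, and the name `solve_contractions` are illustrative assumptions, not the method described in the source.

```python
def solve_contractions(basis, target, steps=500, rate=0.1):
    """Estimate muscle contractions that reproduce observed marker motion.

    basis[j]  -- flattened marker displacements produced by muscle j at
                 full contraction (hypothetical linear muscle model)
    target    -- flattened observed marker displacements for one frame
    Returns one contraction value per muscle, clamped to [0, 1].
    """
    n = len(basis)
    c = [0.0] * n
    for _ in range(steps):
        # Marker displacements predicted by the current contractions.
        pred = [
            sum(c[j] * basis[j][k] for j in range(n))
            for k in range(len(target))
        ]
        resid = [p - t for p, t in zip(pred, target)]
        for j in range(n):
            # Gradient of 0.5 * ||pred - target||^2 with respect to c[j].
            grad = sum(r * b for r, b in zip(resid, basis[j]))
            # Gradient step, then projection back onto the valid range.
            c[j] = min(1.0, max(0.0, c[j] - rate * grad))
    return c
```

Under this model, only the returned contraction values need to be stored or transmitted per frame, not the full set of marker positions. The fixed step size `rate` must be small relative to the magnitude of the basis displacements for the iteration to converge.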

Stereo web-cameras perform 3-D marker tracking to obtain head rigid motion and the non-rigid motion of expressions.
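As a rough illustration of how a marker seen by two cameras yields a 3-D position, the sketch below triangulates one marker from a rectified stereo pair. It assumes an idealized setup (identical pinhole cameras, horizontally aligned, already calibrated), and the function and parameter names (`triangulate`, `focal_px`, `baseline_m`, `cx`, `cy`) are made up for this example. The source does not describe its calibration or triangulation procedure.

```python
def triangulate(left, right, focal_px, baseline_m, cx, cy):
    """Recover a marker's 3-D position from a rectified stereo pair.

    left, right -- (x, y) pixel coordinates of the same marker in each image
    focal_px    -- focal length in pixels (assumed equal for both cameras)
    baseline_m  -- distance between the two camera centres, in metres
    cx, cy      -- principal point (image centre) in pixels
    Returns (X, Y, Z) in metres in the left camera's coordinate frame.
    """
    (xl, yl), (xr, _) = left, right
    disparity = xl - xr
    if disparity <= 0:
        raise ValueError("marker must have positive disparity")
    z = focal_px * baseline_m / disparity  # depth from similar triangles
    x = (xl - cx) * z / focal_px
    y = (yl - cy) * z / focal_px
    return (x, y, z)
```

With every marker triangulated per frame, the head's rigid motion and the non-rigid expression motion can be separated in a later step, for example by using markers on parts of the head that do not deform.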

# Faceshift AG skin

faceshift is a real-time markerless facial performance software that uses 3D cameras and enables its users to perform motion capture.

This work presents a robust, low-cost framework for real-time marker-based 3-D human expression modeling using off-the-shelf stereo web-cameras and inexpensive adhesive markers applied to the face. It does not require a controlled lab environment (lighting or set-up) and is robust under varying conditions, i.e. illumination, facial hair, or skin tone variation. The system has low computational requirements, runs on standard hardware, and is portable with minimal set-up time and no training.
